Agathon is an ancient Greek term for good or virtuous. Each week we meet and discuss what is good and how to do the most good.

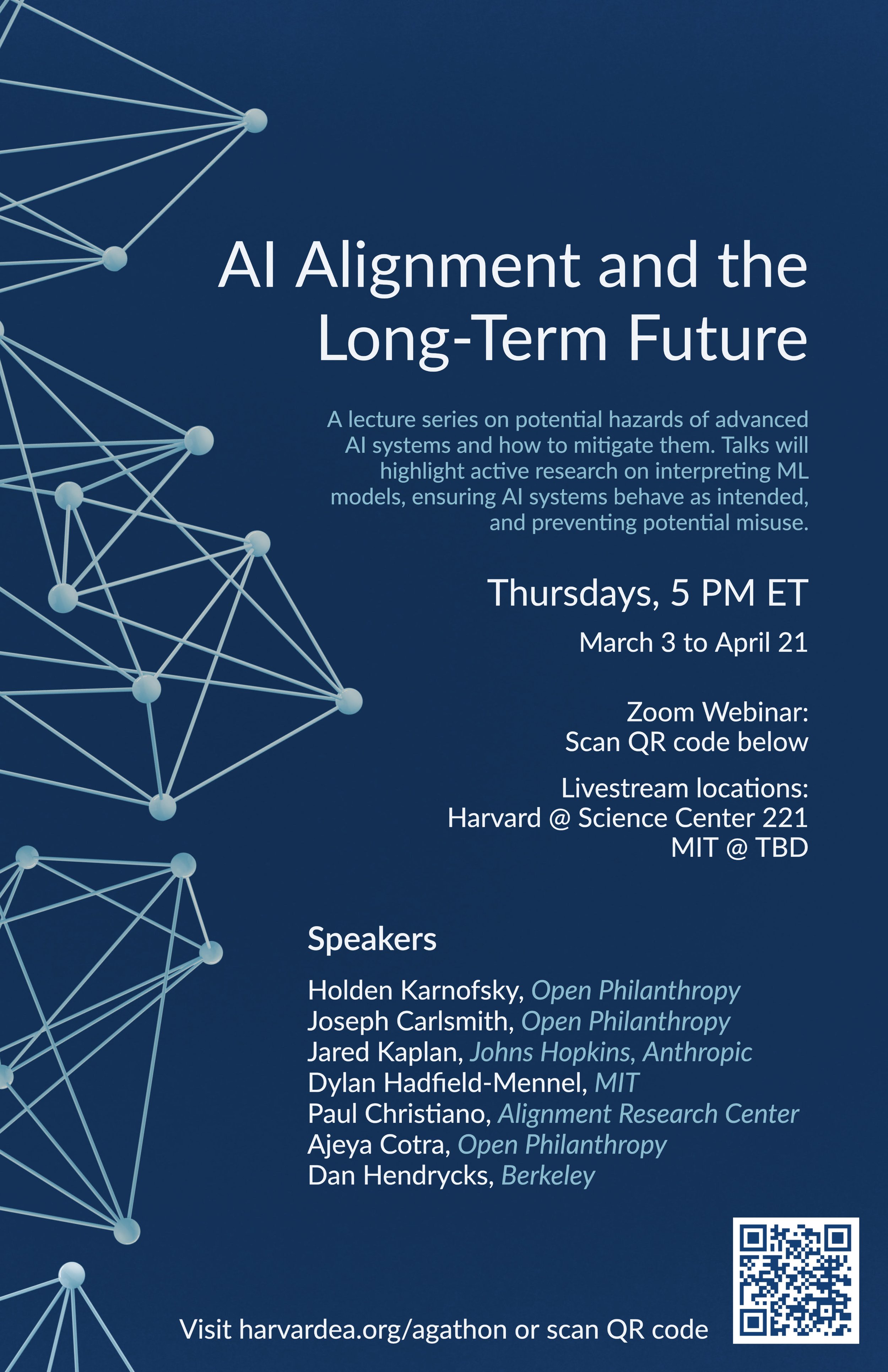

AI Alignment and the Long-Term Future Speaker Series

Spring 2022: March 3 to May 5

RSVP Here

Zoom Link

Feedback Form Here

With the advancement of machine learning research and the possible appearance of artificial general intelligence (AGI) in the near future, it has become an extremely important problem to positively shape the development of AI and to align AI systems with human values. In this speaker series, we bring state-of-the-art research on AI alignment into focus for audiences interested in contributing to this field. We will kick off the series by closely examining the potential risks posed by AGI and making the case for prioritizing the mitigation of those risks now. Later in the series, we will hear more technical talks on concrete proposals for AI alignment.

You can attend the talks in person at Harvard and MIT, or join remotely through the webinar by RSVPing (link above). Unless otherwise noted, all talks take place on Thursdays at 5 pm EST (2 pm PST, 10 pm GMT). Dinner is provided at the in-person venues.

Harvard Location: Science Center 221

MIT Location: 3-333

FAQs:

1. Will the talks be recorded?

Yes. Talks are recorded by default and, with the speaker's permission, will be published here after the talk.

2. May I stream this to my local group?

Please do.

3. Where can I read more about state-of-the-art thinking on alignment?

A good place to catch up with active researchers is the Alignment Forum.

4. I want to work on alignment. Where do I start?

There are many opportunities at different organizations! A summary of possible career paths is available here.

Schedule

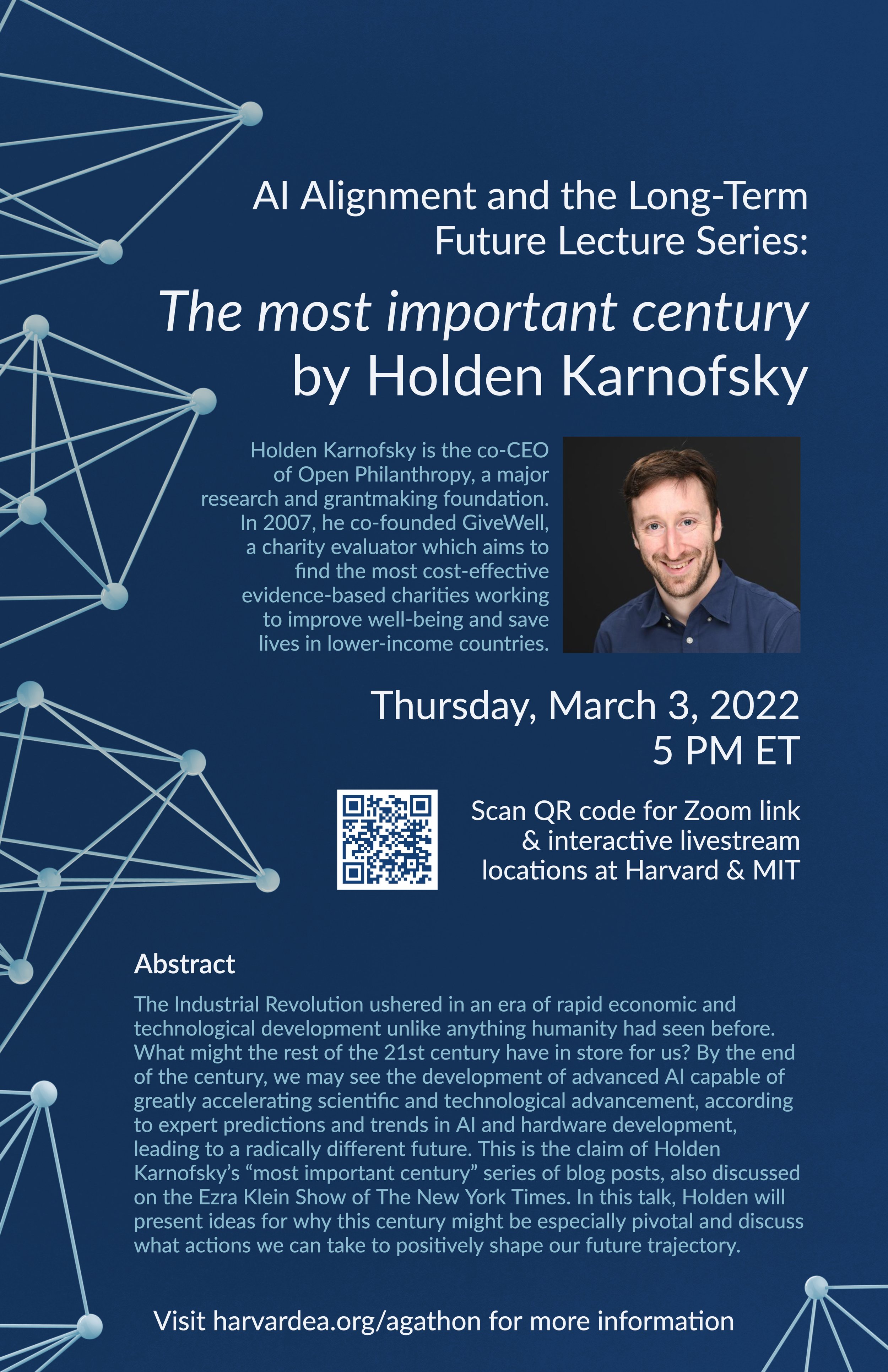

Thursday, March 3, 2022

Holden Karnofsky, Open Philanthropy

“The most important century”

The Most Important Century blog | Recording

The Industrial Revolution ushered in an era of rapid economic and technological development unlike anything humanity had seen before. What might the rest of the 21st century have in store for us? Based on expert predictions and on trends in AI and hardware development, we may see, by the end of this century, advanced AI capable of greatly accelerating scientific and technological progress, leading to a radically different future. This is the claim of Holden Karnofsky's "most important century" series of blog posts, which was also discussed on The Ezra Klein Show from The New York Times. In this talk, Holden will present reasons why this century might be especially pivotal and discuss what actions we can take to positively shape our future trajectory.

Holden Karnofsky is the co-CEO of Open Philanthropy, a major research and grantmaking foundation. In 2007, he co-founded GiveWell, a charity evaluator that aims to find the most cost-effective, evidence-based charities working to improve well-being and save lives in lower-income countries.

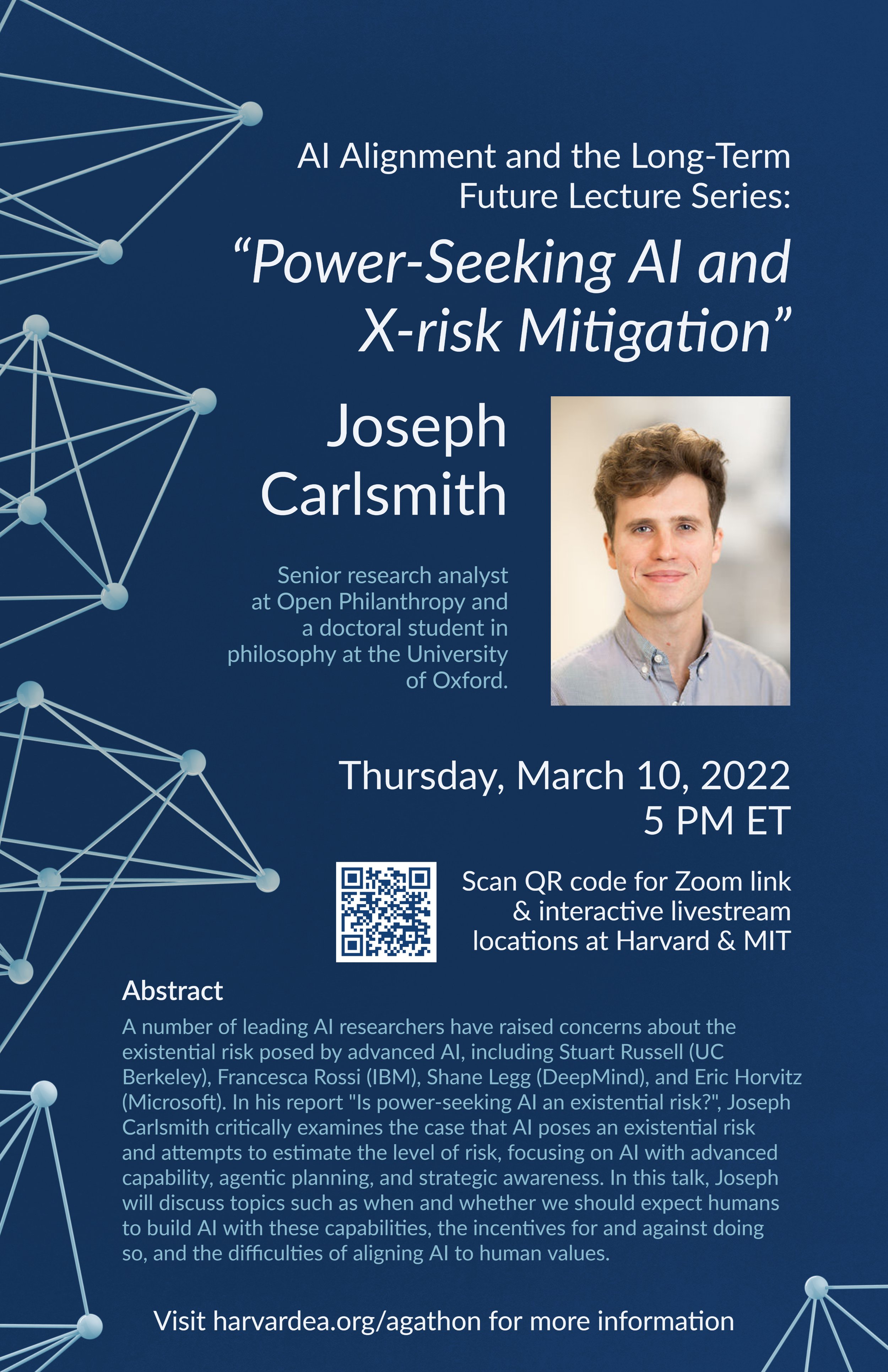

Thursday, March 10, 2022

Joseph Carlsmith, Open Philanthropy

“Power-Seeking AI and X-risk Mitigation”

A number of leading AI researchers have raised concerns about the existential risk posed by advanced AI, including Stuart Russell (UC Berkeley), Francesca Rossi (IBM), Shane Legg (DeepMind), and Eric Horvitz (Microsoft). In his report "Is power-seeking AI an existential risk?", Joseph Carlsmith critically examines the case that AI poses an existential risk and attempts to estimate the level of that risk, focusing on AI with advanced capabilities, agentic planning, and strategic awareness. In this talk, Joseph will discuss whether and when we should expect humans to build AI with these capabilities, the incentives for and against doing so, and the difficulty of aligning such AI with human values.

Joseph Carlsmith is a senior research analyst at Open Philanthropy and a doctoral student in philosophy at the University of Oxford.

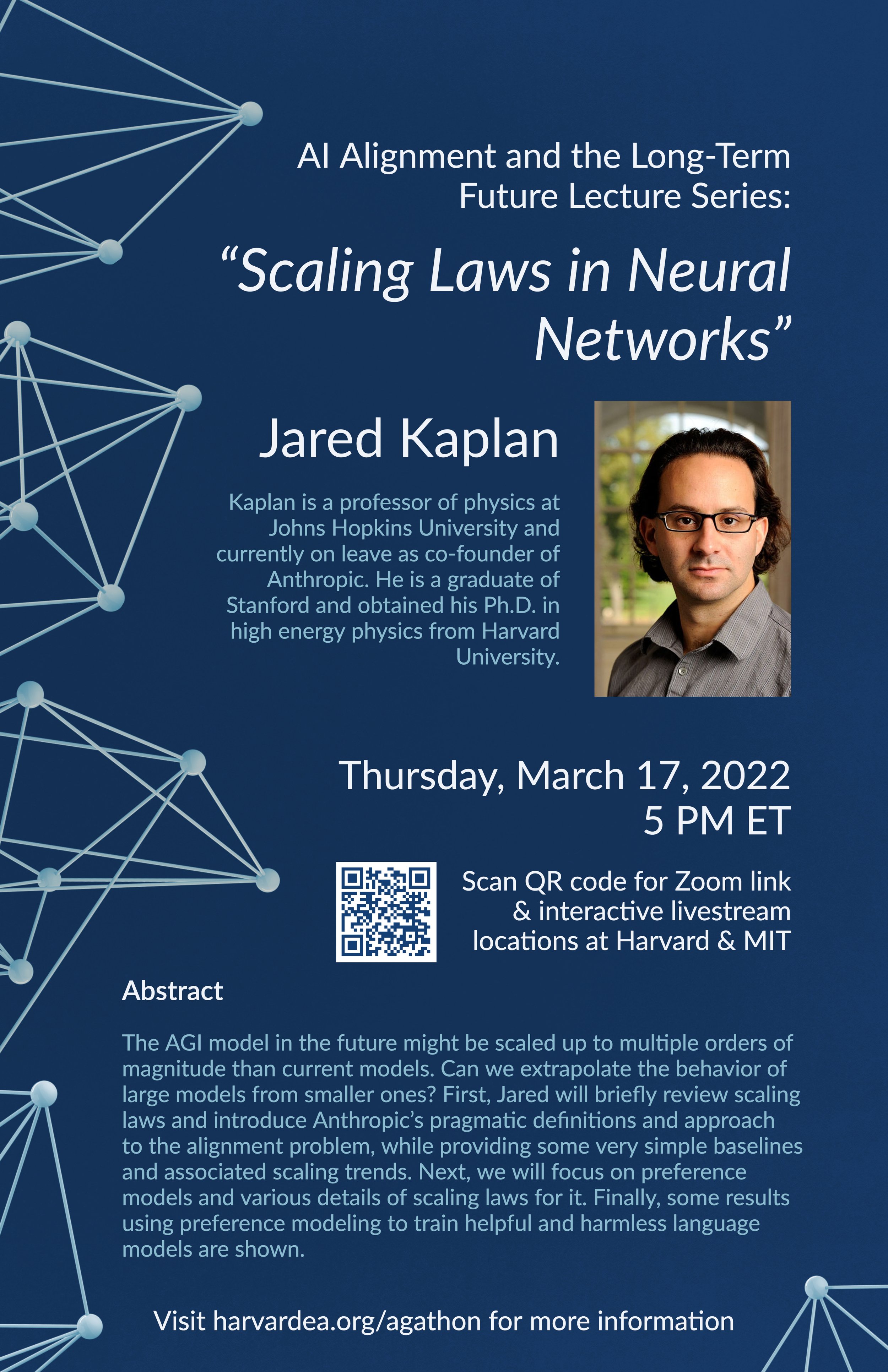

Thursday, March 17, 2022

Jared Kaplan, Johns Hopkins / Anthropic

“A General Language Assistant as a Laboratory for Alignment”

Scaling laws for training AI models

Future AGI systems might be several orders of magnitude larger than current models. Can we extrapolate the behavior of large models from smaller ones? First, Jared will briefly review scaling laws and introduce Anthropic's pragmatic definitions of, and approach to, the alignment problem, along with some very simple baselines and their associated scaling trends. Next, he will focus on preference models and the details of their scaling laws. Finally, he will present some results from using preference modeling to train helpful and harmless language models.

Jared Kaplan is a professor of physics at Johns Hopkins University, currently on leave as a co-founder of Anthropic. He is a graduate of Stanford and obtained his Ph.D. in high energy physics from Harvard University.

Tuesday, March 22, 2022 (In person @Harvard)

Jacob Steinhardt, UC Berkeley

“Forecasting and Aligning AI”

Modern ML systems sometimes undergo qualitative shifts in behavior simply by "scaling up" the number of parameters and training examples. Given this, how can we extrapolate the behavior of future ML systems and ensure that they behave safely and are aligned with humans? I'll argue that we can often study (potential) capabilities of future ML systems through well-controlled experiments run on current systems, and use this as a laboratory for designing alignment techniques. I'll also discuss some recent work on "medium-term" AI forecasting.

Jacob Steinhardt is an Assistant Professor in the Department of Statistics at UC Berkeley and previously worked at OpenAI and Open Philanthropy.

Thursday, April 7, 2022

Paul Christiano, Alignment Research Center

“Eliciting Latent Knowledge”

When we train an ML system, it may build up latent "knowledge" about the world that is instrumentally useful for achieving a low loss. For example, a video model may infer the positions and velocities of objects in order to predict the next frame. I'll argue that eliciting this knowledge would go much of the way towards safely deploying powerful AI, even if we could only do so in the most "straightforward" cases where there is little doubt about what the model knows or how to describe that knowledge to a human. I'll describe a naive training strategy to get the model to honestly answer questions using all of its knowledge and explain why I expect that strategy to fail for sufficiently powerful systems. Then I'll discuss a few more sophisticated alternatives which may also fail, and finally outline the approach we are currently taking at ARC to try to resolve this problem in the worst case.

Paul Christiano is a co-founder of and researcher at the Alignment Research Center, and previously worked on the AI safety team at OpenAI.

Thursday, April 14, 2022

Buck Shlegeris, Redwood Research

“Our Current Directions in Mechanistic Interpretability Research”

It's quite hard to define the goal of interpretability research. There's an intuitively reasonable long-term goal: we'd like to know whether our AI is giving us answers because they're true or because it wants us to trust it so that it can murder us later. Even though it seems difficult or impossible to distinguish between these possibilities based only on the input-output behavior of the model, it might be possible to distinguish between them by understanding the internal properties of the model. But how do we pick short-term research directions that lead toward this goal? In this talk, we'll present a way of thinking about the goal of interpretability research that might lead us in a good direction, and we'll talk about the results we've gotten so far.

Buck Shlegeris is co-founder and CTO of Redwood Research, a non-profit institute dedicated to solving the alignment problem through innovative ML engineering strategies.

Thursday, April 21, 2022

Dan Hendrycks, UC Berkeley

“Pragmatic AI Safety”

AI systems are rapidly increasing in size, acquiring new capabilities, and being deployed in increasingly high-stakes settings. As with other powerful technologies, safety for AI should be a leading research priority. In response to emerging safety challenges in AI, such as those introduced by recent large-scale models, I will present a pragmatic new roadmap for AI safety. I'll discuss how researchers can shape the process that will lead to strong AI systems and steer that process in a safer direction.

Dan Hendrycks is a graduate student in Computer Science at UC Berkeley and has been a driving force in building AI safety as an academic field.

Thursday, April 28, 2022

Ajeya Cotra, Open Philanthropy

“AI Timeline and Alignment Risk”

Ajeya will discuss a framework for estimating when we might be able to afford very powerful AI systems, by comparing the resources required to train such a system with various "biological anchors."

Ajeya Cotra is a senior research analyst at Open Philanthropy. She obtained her BA in computer science from UC Berkeley.

Thursday, May 5, 2022

Dylan Hadfield-Menell, MIT (In-Person @ MIT IHQ 3rd Floor)

“Incompleteness, Artificial Intelligence, and the Problem with Proxies”

One of the most useful developments in the field of artificial intelligence is the use of incentives to program target behaviors into systems. This gives system designers a convenient way to specify useful, goal-driven behaviors. However, it also creates problems due to the inherent incompleteness of these goals: we often observe that optimizing a proxy for one's intended goal eventually leads to counterintuitive and undesired results. This is sometimes attributed to Goodhart's Law: "When a measure becomes a target, it ceases to be a good measure." In this talk, I will present theoretical results that characterize situations where Goodhart's Law holds and discuss approaches to managing this incompleteness. I will conclude with a discussion of how incomplete specifications are managed in recommendation systems and propose research directions for safe AI systems that have affordances for updating and maintaining aligned incentives.

Dylan Hadfield-Menell is a professor of EECS at MIT and received his Ph.D. in Computer Science from UC Berkeley. His research focuses on the value alignment problem in artificial intelligence and aims to help create algorithms that pursue the intended goals of their users.